All questions related to the Monocular Depth Estimation Challenge can be asked in this thread.


Monocular Depth Estimation Challenge

- Thread starter Andrey Ignatov


I have some questions about the data.

1. How is the depth generated: by radar, ToF, or computed from two cameras?

2. At first glance at the depth data, I assumed it was computed from two cameras. However, why does some of the sky have ground-truth depth while other parts are black?


The depth given in the training data is stored as uint16, so the values range from 0 to 65535. What does a depth value mean in the real world?

These values are distances in millimeters (i.e., 1000 = 1 m).

The images were collected using a stereo ZED camera.

Each depth estimation method has its own working range (minimum and maximum distance to the object). Additionally, for some objects such as the sky, the distance cannot be measured by any method, as it is technically infinite. In these cases, the resulting distance values are replaced by zeros and should be ignored during both training and validation.
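For illustration, here is a minimal NumPy sketch of how a ground-truth map could be interpreted under the conventions above (millimeters, with zeros marking invalid pixels). It assumes the PNG has already been loaded unchanged into a `uint16` array, e.g. with OpenCV's `cv2.imread(path, cv2.IMREAD_UNCHANGED)`; the helper name `depth_to_meters` is hypothetical.

```python
from typing import Tuple

import numpy as np


def depth_to_meters(depth_u16: np.ndarray) -> Tuple[np.ndarray, np.ndarray]:
    """Convert a uint16 depth map in millimeters to meters plus a validity mask."""
    if depth_u16.dtype != np.uint16:
        raise TypeError(f"expected uint16 depth, got {depth_u16.dtype}")
    # 0 means the distance could not be measured (e.g. sky); ignore these pixels.
    valid = depth_u16 > 0
    # 1000 units = 1 m, so dividing by 1000 gives meters.
    meters = depth_u16.astype(np.float32) / 1000.0
    return meters, valid


raw = np.array([[0, 1000], [2500, 65535]], dtype=np.uint16)
meters, valid = depth_to_meters(raw)
# valid -> [[False, True], [True, True]]
```

The mask is returned separately so that a loss or metric can simply skip the zero pixels instead of treating them as "very close" objects.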

How will the predictions be evaluated? Using PSNR and SSIM?

They will be evaluated using the RMSE, si-RMSE, log10 and REL metrics. We are also preparing an additional tutorial for this challenge now.
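As a rough reference, the four metrics can be sketched in NumPy with their commonly used definitions, computed only on pixels with non-zero ground truth. This is an assumption-based sketch (the function name `depth_metrics` and the `eps` clamp are hypothetical); the organizers' official implementation may differ in details such as the si-RMSE normalization.

```python
import numpy as np


def depth_metrics(pred: np.ndarray, gt: np.ndarray, eps: float = 1e-6) -> dict:
    """RMSE, si-RMSE, log10 and REL over pixels with non-zero ground truth."""
    mask = gt > 0  # zeros mark pixels whose depth could not be measured
    p = np.maximum(pred[mask].astype(np.float64), eps)  # clamp so logs stay finite
    g = gt[mask].astype(np.float64)

    rmse = np.sqrt(np.mean((p - g) ** 2))

    # Scale-invariant RMSE in log space: subtracting the squared mean log
    # difference cancels any global scale factor between prediction and truth.
    d = np.log(p) - np.log(g)
    si_rmse = np.sqrt(max(np.mean(d ** 2) - np.mean(d) ** 2, 0.0))

    log10 = np.mean(np.abs(np.log10(p) - np.log10(g)))
    rel = np.mean(np.abs(p - g) / g)
    return {"rmse": float(rmse), "si_rmse": float(si_rmse),
            "log10": float(log10), "rel": float(rel)}
```

Note that only RMSE depends on the absolute units; the other three are ratios or log differences, so they are the same whether depth is expressed in millimeters or meters.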

So, where can I see the tutorial?

I get the following error during evaluation. Even when I submit the depth PNGs from the training set, I get the same error, so I suspect something is wrong with the evaluation system.

WARNING: Your kernel does not support swap limit capabilities or the cgroup is not mounted. Memory limited without swap.

Traceback (most recent call last):

File "/tmp/codalab/tmpHNjjjw/run/program/evaluation.py", line 104, in

compute_psnr(ref_im,res_im)

File "/tmp/codalab/tmpHNjjjw/run/program/evaluation.py", line 41, in compute_psnr

_open_img(os.path.join(input_dir,'ref',ref_im)),

File "/tmp/codalab/tmpHNjjjw/run/program/evaluation.py", line 24, in _open_img

h, w, c = F.shape

ValueError: need more than 2 values to unpack


Hi, were you able to submit your predictions?


It appears that the SISR (single-image super-resolution) evaluation is being run instead of the depth estimation one; hopefully the organizers will fix it soon.


The validation server is up and running.

We've updated the evaluation scripts and some other details on the server.

The main ranking measure is Score1 (si-RMSE); Score2 (RMSE) is provided for reference.

The scoring scripts we are using are provided here.

Please check them carefully, as we ignore far or undefined pixels (according to the ground truth).
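A sketch of that kind of pixel filtering, applied to RMSE (Score2). Undefined pixels are the zeros in the ground truth; the cut-off for far pixels is not stated here, so `max_depth` is a hypothetical parameter in ground-truth units (millimeters), and the helper names are illustrative only.

```python
import numpy as np


def valid_mask(gt: np.ndarray, max_depth=None) -> np.ndarray:
    """Boolean mask of ground-truth pixels that count towards the score."""
    mask = gt > 0  # undefined pixels are stored as 0
    if max_depth is not None:
        mask &= gt <= max_depth  # hypothetical cut-off for far pixels
    return mask


def masked_rmse(pred: np.ndarray, gt: np.ndarray, max_depth=None) -> float:
    """RMSE over valid pixels only; cast first so uint16 subtraction cannot wrap."""
    mask = valid_mask(gt, max_depth)
    diff = pred[mask].astype(np.float64) - gt[mask].astype(np.float64)
    return float(np.sqrt(np.mean(diff ** 2)))
```

Casting to `float64` before subtracting matters here: subtracting two `uint16` arrays directly would wrap around instead of producing negative errors.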

I've rerun the latest submissions of:

- zhyl
- Minsu.Kwon
- Parkzyzhang

Only the successful submissions count towards the maximum number of allowed submissions.

Should you have questions please let us know.


So, where can I see the tutorial?

We have released a tutorial demonstrating how to train a simple U-Net model on this depth dataset; you can find it at the following link:

https://github.com/aiff22/MAI-2021-Workshop/tree/main/depth_estimation

Additionally, you can find the implementation of the RMSE, SI-RMSE, LOG10 and REL metrics in Python and TensorFlow in the same repository.

Hi, the results submitted earlier can now be seen on the leaderboard.


However, my new submission fails with the following error:

WARNING: Your kernel does not support swap limit capabilities or the cgroup is not mounted. Memory limited without swap.

Traceback (most recent call last):

File "/tmp/codalab/tmpbZJhsA/run/program/evaluation.py", line 19, in

from myssim import compare_ssim as ssim

File "/tmp/codalab/tmpbZJhsA/run/program/myssim.py", line 6, in

from numpy.lib.arraypad import _validate_lengths

ImportError: cannot import name '_validate_lengths'

Hi,

Please compare your successful submission with the one you just submitted.

Your output PNG files should have the same format as the ground-truth depth images.
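Before uploading, a quick local check along these lines can catch format mismatches. It assumes both the prediction and a ground-truth map have been loaded unchanged as NumPy arrays (e.g. with `cv2.IMREAD_UNCHANGED`); `check_submission_depth` is a hypothetical helper, not part of the evaluation scripts.

```python
import numpy as np


def check_submission_depth(pred: np.ndarray, gt: np.ndarray) -> None:
    """Raise ValueError if a predicted depth map differs in format from the ground truth."""
    if pred.ndim != 2:
        # A 3-channel image, for example, would have shape (H, W, 3).
        raise ValueError(f"expected a single-channel (H, W) array, got shape {pred.shape}")
    if pred.dtype != gt.dtype:
        raise ValueError(f"expected dtype {gt.dtype}, got {pred.dtype}")
    if pred.shape != gt.shape:
        raise ValueError(f"expected shape {gt.shape}, got {pred.shape}")
```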


I have just compared the two results and found no difference in format.

Look at the error message: the problem is that your NumPy version is too new, and you should use numpy <= 1.15.0.

See https://github.com/numpy/numpy/issues/12744.


I ran into the same problem when I submitted my results.

Will the failed submissions be ignored?

Yes, failed submissions are not counted.


Make sure that you are submitting the results in the correct format (single-channel 16-bit grayscale images).
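A minimal sketch of converting a model prediction into that format, assuming the model outputs depth in meters (adjust the factor if it already outputs millimeters). `to_uint16_depth` is a hypothetical helper; values are rounded to whole millimeters and clipped to the uint16 range.

```python
import numpy as np


def to_uint16_depth(pred_m: np.ndarray) -> np.ndarray:
    """Convert a float depth prediction in meters to a single-channel uint16 map in millimeters."""
    depth = np.squeeze(pred_m)  # drop batch/channel axes, e.g. (1, H, W, 1) -> (H, W)
    if depth.ndim != 2:
        raise ValueError(f"cannot reduce shape {pred_m.shape} to a single channel")
    mm = np.rint(depth.astype(np.float64) * 1000.0)  # 1000 units = 1 m
    # Clip before casting so out-of-range values saturate instead of wrapping.
    return np.clip(mm, 0, np.iinfo(np.uint16).max).astype(np.uint16)
```

The resulting array can then be written as a 16-bit PNG, for example with `cv2.imwrite("0.png", to_uint16_depth(pred))`, since OpenCV saves `uint16` arrays to PNG without reducing the bit depth.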

Right now, it's OK.


Range of depth, valid/invalid depth in ground-truth:

Dear Organizers,

I was checking the ground-truth depth maps in the training dataset and came across several images in which the sky depth is not marked as invalid but instead has values of around 35000.

However, similar depth values (around 35000) are also assigned to distant objects, such as walls, in some images.

For example, see images 120.png and 131.png: the sky region in 120.png has depth values of around 37000, whereas the distant wall in 131.png also has values of around 37000.

I expected sky regions to contain the invalid depth label (i.e., 0), but that is not the case in many images. How can we train the DNN properly with such depth labelling, or am I missing something? Please clarify.

Thank you,

Kunal


Since this is a real rather than a synthetically generated dataset, some measurements might not be completely accurate. However, the percentage of failures is relatively small (less than 10-15%), so your model should be robust enough to learn the main mapping function. The results from other challenge participants show that this shouldn't cause any problems.

Additionally, you are free to use any other dataset for pre-training your model; this should just be indicated in your final report.

We have some questions about the final submitted TFLite model.

1. Since the resolution of the train/val data is 480x640, should the input of the TFLite model be 1x480x640x3 or 480x640x3? Should the input data type be float32 or uint8? (We found it hard to generate a TFLite model with a 480x640x1 input in uint8 format, while it's easy to get a 1x480x640x1 input in float32 format.)

2. Is the input image in RGB or BGR?

3. Should the TFLite output be 480x640x1 or 480x640?


Should the input data type be float32 or uint8?

Float32.

2. Is the input image in RGB or BGR?

3. Should the TFLite output be 480x640x1 or 480x640?

Edited: The model's input tensor should have size [1x480x640x3]; RGB images are used as input.
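A NumPy sketch of preparing one image for a model with that input signature and flattening its output, assuming a [1x480x640x1] output tensor. How pixel values should be normalized is not specified here, so `scale` is a hypothetical parameter that must match whatever was used during training; both helper names are illustrative.

```python
import numpy as np

H, W = 480, 640


def to_tflite_input(rgb: np.ndarray, scale: float = 1.0) -> np.ndarray:
    """Pack one H x W x 3 RGB image into a float32 [1, 480, 640, 3] tensor."""
    if rgb.shape != (H, W, 3):
        raise ValueError(f"expected an RGB image of shape {(H, W, 3)}, got {rgb.shape}")
    x = rgb.astype(np.float32) * scale  # normalization is an assumption; match training
    return x[np.newaxis, ...]  # add the batch axis


def from_tflite_output(out: np.ndarray) -> np.ndarray:
    """Drop the batch and channel axes: [1, 480, 640, 1] -> (480, 640)."""
    return out.reshape(H, W)
```

If the image was read with OpenCV, which returns BGR channel order, `bgr[..., ::-1]` gives the RGB order expected here. The resulting array can be passed to `tf.lite.Interpreter.set_tensor` using the input index from `get_input_details()`.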


Float32. The size of the model's input tensor should be [1x480x640x1].

The input is [1x480x640x1], so is the input image grayscale?

Dear organizer,

I have a question regarding the runtime of the TFLite model.

I've measured the runtime of the model both on the runtime-check website (http://lightspeed.difficu.lt:60001/) and on my Android phone (LG G7+ ThinQ).

The inference times differ greatly: it took much longer on the Raspberry Pi 4 than on the phone. Should I consider the inference time on the Raspberry Pi 4 as the correct runtime?


The inference times differ greatly (it took much longer on the Raspberry Pi 4 than on the phone)

Yes, this is fine: the Raspberry Pi's CPU is much less powerful than the Snapdragon 845 in the LG G7 phone.
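Since absolute timings depend heavily on the hardware, comparisons are only meaningful on the same device with the same procedure. A generic timing sketch is shown below; it is not how the lightspeed server measures runtime, and `run` is a hypothetical zero-argument callable that could wrap, for example, `interpreter.invoke()` of a `tf.lite.Interpreter`.

```python
import statistics
import time
from typing import Callable


def median_latency_ms(run: Callable[[], object], warmup: int = 3, repeats: int = 20) -> float:
    """Median wall-clock time of run() in milliseconds, measured after warm-up calls."""
    for _ in range(warmup):
        run()  # first calls often include one-off setup costs (allocation, caching)
    samples = []
    for _ in range(repeats):
        start = time.perf_counter()
        run()
        samples.append((time.perf_counter() - start) * 1000.0)
    return statistics.median(samples)
```

The median is used rather than the mean so that occasional slow runs (for example, from background processes) do not dominate the result.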

Will this formula be the final ranking formula and remain unchanged?

Yes, this formula will remain unchanged.

jiaoyangyao


Hi Andrey, I'm having a problem uploading my model to the online testing portal http://lightspeed.difficu.lt:60001/.

I would like to know whether there are any restrictions on the TFLite models for testing: maximum file size, tensor operations, TensorFlow version, input layer shape, etc. I have already tested my TFLite file with other tools and it works fine.

Thank you!


Hi, could a Word version of Factsheet_Template_MAI2021_Challenges please be provided?

It would make submitting easier and allow more people to submit.

CVPR now also provides Word templates: http://cvpr2021.thecvf.com/node/33#submission-guidelines


Will the final test phase and the final submission deadline be extended?

The deadline for the final submission is March 21, 11:59 p.m. UTC, it will not be extended.

Why does my TFLite model run on my PC but fail when I submit it to the online website?

I have already tested my TFLite file with other tools and it works fine.

Are your models running fine with AI Benchmark?

Hi, could a Word version of Factsheet_Template_MAI2021_Challenges please be provided?

Unfortunately, we only use LaTeX templates, as TeX is the standard format used by all publishers. It is really easy to work with: please refer to this or this tutorial to learn the TeX basics. You can also edit this fact sheet template online in Overleaf.

Yes, it runs with AI Benchmark, but on the online website it sometimes runs and sometimes doesn't. What could be the reason?


jiaoyangyao


Yes, my model runs in the AI Benchmark APK in both CPU and TFLite GPU modes.

The test phase has started, but it seems that the test data hasn't been uploaded yet.

Check the email sent to all challenge participants yesterday.


Ok, send me the links to your models by PM.

This is my model link: https://drive.google.com/file/d/1STNygyY-0HznnxClnWmgFrKTQDfe9iSr/view?usp=sharing


There was probably a connection timeout: we checked, and this model runs fine on lightspeed.

Dear organizer,

You mentioned in the email that only 'model.tflite' should be submitted and that the provided model will be applied to the test images.

Does that mean the output tensor will be evaluated directly against the ground-truth depth, or will it first be saved as a .png image and then evaluated?

BTW, the link to download the factsheet does not work.


Does that mean the output tensor will be evaluated directly against the ground-truth depth?

Yes.

BTW, the link to download the factsheet does not work.

You probably need a VPN to download it; I'm also attaching the factsheet template below.

Attachments

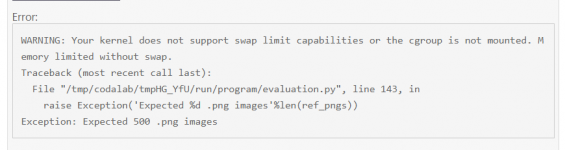

I have the same error. It happened when I submitted to the final test phase. Why?

Do you know how to solve it?

No, I have no idea. Perhaps the organizers do.

If you manage to submit successfully, please tell me how to do it. Thank you!

Hi Andrey,


I followed the instructions in the factsheet and submitted the zip archive on CodaLab. However, an error occurs because the evaluation expects .png images, while, according to the factsheet and the earlier email, the uploaded zip should contain no images.

Could you please check the evaluation program?

WARNING: Your kernel does not support swap limit capabilities or the cgroup is not mounted. Memory limited without swap.

Traceback (most recent call last):

File "/tmp/codalab/tmp4Xkylc/run/program/evaluation.py", line 143, in

raise Exception('Expected %d .png images'%len(ref_pngs))

Exception: Expected 500 .png images