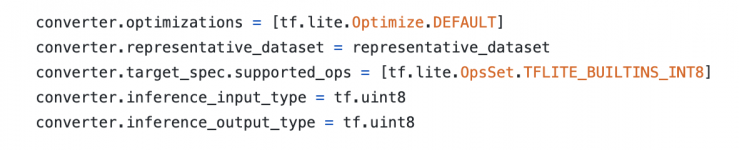

TF Lite quantization

- Thread starter qiuzhangTiTi

- Start date

Hi @qiuzhangTiTi,

1. What TensorFlow version are you using?

2. Is the experimental_new_converter / experimental_new_quantizer enabled?

qiuzhangTiTi

New member

1. TF2.3

2. Yes.

I found the problem: it seems the "depth_to_space" op doesn't support uint8 input quantization. I can still feed float32 input (0~255) to the quantized model, but it is extremely slow on PC.
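For context, when a TFLite model does have a quantized uint8 input, the float values are mapped to integers with the scale and zero point reported in `input_details[0]['quantization']`. A minimal pure-Python sketch of that mapping is below; the helper name `quantize_to_uint8` is hypothetical and the example scale and zero point are assumptions, not values from this model.

```python
def quantize_to_uint8(values, scale, zero_point):
    """Affine quantization: q = round(x / scale) + zero_point, clamped to [0, 255].

    Note: Python's round() rounds halves to the nearest even integer, which
    can differ by one step from a runtime that rounds halves away from zero.
    """
    quantized = []
    for value in values:
        q = round(value / scale) + zero_point
        quantized.append(max(0, min(255, q)))
    return quantized


# Assumed example: scale 1.0 and zero point 0 leave 0~255 pixel values
# unchanged and clamp anything outside that range.
pixels = quantize_to_uint8([0.0, 127.0, 255.0, 300.0], scale=1.0, zero_point=0)
```

With a real model, the scale and zero point would come from `interpreter.get_input_details()[0]['quantization']`.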

1. TF2.3

You need to update to TensorFlow 2.4 first.

Almost every TF version contains a number of critical model quantization issues. Since the TF / TFLite teams have serious problems with a meaningful strategy here, they tend to change the entire quantization logic in almost every TF release. As a result, the same quantization code might produce a model with floating-point input / output nodes, inserted quantize / dequantize ops, or even a completely corrupted network, depending on the TF build. The provided code was developed and tested with TF 2.4, as this is the latest official build supporting the majority of key TFLite ops, and it is possible to get an almost fully quantized model with it. If you have issues updating to this version, you can just create a separate Python virtual environment with tf-cpu-2.4 and use it only for model conversion.
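Since the result depends so strongly on the TF build, a conversion script can refuse to run under an unexpected version. The following is only a sketch; `major_minor` and `require_tf` are hypothetical helper names, and pinning to exactly 2.4 is an assumption based on the advice above.

```python
def major_minor(version):
    """Extract (major, minor) from a version string such as '2.4.1'."""
    parts = version.split(".")
    return int(parts[0]), int(parts[1])


def require_tf(version, expected=(2, 4)):
    """Raise if the given TensorFlow version string is not the expected release."""
    found = major_minor(version)
    if found != expected:
        raise RuntimeError(
            "Expected TensorFlow %d.%d for conversion, found %s"
            % (expected[0], expected[1], version)
        )
    return found
```

In the conversion environment this would be called as `require_tf(tf.__version__)` after `import tensorflow as tf`.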

2. Yes.

As mentioned in the tutorial, you should try converting your model with both the new MLIR and the old TOCO converters, as the resulting models differ between the two for the majority of architectures. I guess the old TOCO converter (experimental_new_converter = False) might also be a solution to this problem.

However, after I print( input_details[0]['dtype'] )
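The two-converter comparison suggested above could be sketched roughly as follows. This is not the tutorial's code: `convert_with_both`, the `CONVERTERS` mapping, the SavedModel input, and the uint8 input / output types are assumptions for illustration, and TF 2.4 is assumed as recommended earlier in the thread.

```python
# Hypothetical labels mapped to the value of converter.experimental_new_converter.
CONVERTERS = {"mlir": True, "toco": False}


def convert_with_both(saved_model_dir, representative_dataset):
    """Attempt full-integer conversion with each converter.

    Returns a dict: label -> TFLite model bytes, or the exception that was raised.
    `representative_dataset` is a callable yielding lists of sample input arrays.
    """
    import tensorflow as tf  # imported lazily; TF 2.4 is assumed

    results = {}
    for label, use_mlir in CONVERTERS.items():
        converter = tf.lite.TFLiteConverter.from_saved_model(saved_model_dir)
        converter.experimental_new_converter = use_mlir
        converter.optimizations = [tf.lite.Optimize.DEFAULT]
        converter.representative_dataset = representative_dataset
        converter.target_spec.supported_ops = [tf.lite.OpsSet.TFLITE_BUILTINS_INT8]
        converter.inference_input_type = tf.uint8   # assumption: uint8 input
        converter.inference_output_type = tf.uint8  # assumption: uint8 output
        try:
            results[label] = converter.convert()
        except Exception as err:  # e.g. an op that cannot be quantized
            results[label] = err
    return results
```

Keeping the exception instead of re-raising makes it easy to see which of the two converters fails on a given architecture.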

One additional tip - you can use Netron for visualizing your TFLite model and checking the type of all its nodes. It's really convenient.
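As a scriptable complement to Netron, the TFLite interpreter can also report the dtype of every tensor. A minimal sketch, where `dtype_name`, `count_dtypes`, and `inspect_model` are hypothetical helper names and the model path is supplied by the caller:

```python
from collections import Counter


def dtype_name(dtype):
    """Readable name for a dtype entry (e.g. numpy.float32 -> 'float32')."""
    return getattr(dtype, "__name__", str(dtype))


def count_dtypes(tensor_details):
    """Count tensors per dtype from a list of interpreter detail dicts."""
    return Counter(dtype_name(detail["dtype"]) for detail in tensor_details)


def inspect_model(model_path):
    """Return the first input's dtype name and a per-dtype tensor count."""
    import tensorflow as tf  # imported lazily so the helpers work without TF

    interpreter = tf.lite.Interpreter(model_path=model_path)
    input_dtype = dtype_name(interpreter.get_input_details()[0]["dtype"])
    return input_dtype, count_dtypes(interpreter.get_tensor_details())
```

A model that reports a float32 input or a large share of float32 tensors here is not fully quantized.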

qiuzhangTiTi

New member

Sounds great. Thanks.

deepernewbie

New member

Same here.

TFLite post-training quantization fails with

Quantization not yet supported for op: 'DEPTH_TO_SPACE'

using TensorFlow 2.4.1.

Edit:

I installed tf-nightly as well and now it works. However, whenever I select int8 in the ai-benchmark application and try to run the model, the application crashes.

whenever I select int8 from ai-benchmark application and try to run the model the application crashes

Can you please send me the link to your model by private message here?

deepernewbie

New member

Please see my other post in the "Real-Time Image Super-Resolution Challenge" topic, where I included a simple model with Depth_to_space.